We recently migrated two FileMaker solutions from internally stored FileMaker containers to AWS S3 cloud storage, so naturally, we thought to document the experience for others who might be considering the same option. As one would expect, there were a few unknowns going into this project, so our goal with this article is to provide some pointers and documentation for future reference.

Container Field History

FileMaker has had an option for container fields for a very long time. Since their introduction, you have been able to store the container data (files, images, any binary data) internally to the FileMaker file. This means that if you insert a 1 GB file into a container field, the size of your FileMaker file grows by 1 GB.

This growth is undesirable for a few reasons:

- A corruption of the container data can cause a corruption of your FileMaker file.

- The backups cannot really be optimized by FileMaker Server, resulting in higher backup storage requirements.

- The files can get very large, making working with the database more difficult when you need to move copies around or manage the deployment process.

Claris introduced external storage with FileMaker 12, which was (and still is) a huge improvement. FileMaker Server manages the files, so there is no need for a file server at the operating system level, which keeps things blessedly simple. With external storage, the problems encountered when using internal storage are largely eliminated. The FileMaker file stays small, and FileMaker Server’s backup process can optimize the backups by not keeping redundant copies of unchanged container files.

Other than for an occasional preference record, or other ‘single record’ tables, we virtually never use internal storage any longer. Most container data is now stored as external secure storage.

Why Move Container Data out of FileMaker Entirely?

So if external containers are so great, why should you consider moving the container data out of FileMaker entirely?

When the number of files and the aggregate size of all container data start to add up, you’ll eventually reach a point where getting the container data out of FileMaker entirely makes sense. Some of the benefits include the following.

- Reduce your FileMaker Server storage requirements. S3 is a distributed storage system, and your files are replicated across availability zones. S3 can also be configured to replicate across AWS Regions to address regional disaster concerns. This setup can greatly simplify your FileMaker offsite backup configuration and also simplify moving the FileMaker file between servers when necessary.

- Move large file transfers off your FileMaker Server and onto Amazon’s infrastructure, with the option of serving them through Amazon’s content delivery network (CloudFront).

- S3 data can be made directly accessible to external web servers.

One of our clients was able to reduce their FileMaker Server data storage requirements from nearly 1 TB to about 200 MB.

AWS costs are minimal compared to FileMaker server-hosted drive space. As of this writing, S3 Standard storage in the first 50 TB tier costs about 2.3 cents per GB per month (pricing varies by region).
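To put that rate in perspective, here is a rough back-of-the-envelope estimate. It assumes a flat 2.3 cents per GB per month and covers storage only; request and data transfer charges are billed separately and are not modeled here. The function name is ours, chosen for illustration.

```python
def monthly_storage_cost(size_gb: float, rate_per_gb_month: float = 0.023) -> float:
    """Estimate the monthly S3 storage charge in US dollars (storage only)."""
    return round(size_gb * rate_per_gb_month, 2)


# Two sizes in the range discussed in this article.
for label, size_gb in [("800 GB", 800), ("1 TB", 1024)]:
    print(f"{label}: ${monthly_storage_cost(size_gb):.2f} per month")
```

Check the current AWS pricing page for your region before budgeting, since the rate used here is only the figure quoted above.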

Setting Up the AWS S3 Account

Our clients needed to be the primary owner of their AWS accounts, so we walked them through the initial setup, which is pretty straightforward.

When working with AWS or any API, it’s crucial to manage access and security properly. Store your access credentials and API keys in a secure but accessible location to avoid wasting time searching for them later. We have to start a surprising number of projects with a time-consuming hunt for passwords and credentials that have not been kept track of well.

It’s important to resist the temptation to use your root AWS account for API access. Doing so violates Amazon’s best practices and the fundamental security principle of least privilege.

Instead, use your root account to create a separate AWS user or identity with limited privileges specifically for interacting with the S3 API. Amazon’s guide, “Root user best practices for your AWS account,” details the steps for laying this foundation.

Once we had access to the client account, we set up the AWS bucket using the AWS User Guide, including permissions and service keys. (As with any API, you’ll be given credentials during setup, in this case an access key ID and a secret access key, that you’ll need later.)
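As an illustration of least privilege, the sketch below builds an IAM policy document that allows only the four operations used later in this article (list, get, put, delete) on a single bucket. The bucket name `example-filemaker-containers` and the function name are hypothetical, and this is not the exact policy from the client projects; adjust the actions to match your own workflow.

```python
import json


def s3_least_privilege_policy(bucket: str) -> dict:
    """Build a policy limited to listing one bucket and managing its objects."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself.
                "Sid": "ListBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [bucket_arn],
            },
            {
                # Object operations apply to keys inside the bucket.
                "Sid": "ReadWriteDeleteObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": [f"{bucket_arn}/*"],
            },
        ],
    }


# Hypothetical bucket name, for illustration only.
print(json.dumps(s3_least_privilege_policy("example-filemaker-containers"), indent=2))
```

The printed JSON can be pasted into the IAM policy editor and attached to the dedicated API user rather than to the root account.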

Testing in Postman

When working with APIs, Postman has become our go-to tool for testing API calls. If you haven’t done so yet, check out our FileMaker DevCast episode for a Postman demo and a few pointers. The Postman collection for AWS S3 is well-documented and can be downloaded through this link.

After reviewing the workflow with the client, we narrowed down the primary API calls to these four:

- “List” (get a list of objects in a bucket)

- “Copy Object” (copy a file to a bucket)

- “Object” (retrieve a file from a bucket)

- “Delete Object” (delete a file from a bucket)

Once we had the authentication configured and had successfully tested these calls, we moved on to implementing them in the FileMaker solution.

Insert from URL

There are three methods of authentication with Amazon S3:

- HTTP Authorization header, where each authentication value is defined and sent in the request header.

- Query string parameter, where each authentication value is combined into a string to form a URL.

- Browser-based uploads using HTTP POST, where the entire request is handled in a browser window.

Initially, we wanted to use the recommended method of the HTTP Authorization header, but couldn’t get the S3 API to return a successful response. We dug a little deeper and discovered that Jason Wood with Defined Database had written a custom function to build a pre-signed URL for use with the Query String authentication method. Using this custom function, we were able to successfully authenticate and upload to the S3 bucket.

Jason has also written a comprehensive blog post on using S3 with FileMaker, which has additional details about the custom function.
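For readers who want to see what query string authentication involves, here is a minimal Python sketch of AWS Signature Version 4 pre-signing using only the standard library. It is not Jason’s custom function and not the FileMaker implementation; the function name, parameters, and sample credentials are our own assumptions, and it signs only the `host` header with an unsigned payload.

```python
import hashlib
import hmac
from datetime import datetime, timezone
from urllib.parse import quote


def _hmac_sha256(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def presign_s3_url(method, bucket, key, access_key, secret_key,
                   region="us-east-1", expires=900, now=None, host=None):
    """Return a pre-signed S3 URL (SigV4, query string authentication)."""
    now = now or datetime.now(timezone.utc)
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    date_stamp = now.strftime("%Y%m%d")
    # Assumption: virtual-hosted-style regional endpoint unless a host is given.
    host = host or f"{bucket}.s3.{region}.amazonaws.com"
    scope = f"{date_stamp}/{region}/s3/aws4_request"

    # Keep "/" in the object key; percent-encode everything else that needs it.
    canonical_uri = "/" + quote(key, safe="/-_.~")
    params = {
        "X-Amz-Algorithm": "AWS4-HMAC-SHA256",
        "X-Amz-Credential": f"{access_key}/{scope}",
        "X-Amz-Date": amz_date,
        "X-Amz-Expires": str(expires),
        "X-Amz-SignedHeaders": "host",
    }
    # Query parameters must be sorted by name and strictly percent-encoded.
    canonical_query = "&".join(
        f"{quote(name, safe='-_.~')}={quote(value, safe='-_.~')}"
        for name, value in sorted(params.items())
    )
    canonical_request = "\n".join([
        method,
        canonical_uri,
        canonical_query,
        f"host:{host}\n",    # canonical headers block, newline-terminated
        "host",              # signed headers
        "UNSIGNED-PAYLOAD",  # body is not hashed for pre-signed URLs
    ])
    string_to_sign = "\n".join([
        "AWS4-HMAC-SHA256",
        amz_date,
        scope,
        hashlib.sha256(canonical_request.encode("utf-8")).hexdigest(),
    ])

    # Derive the signing key: date -> region -> service -> "aws4_request".
    signing_key = ("AWS4" + secret_key).encode("utf-8")
    for part in (date_stamp, region, "s3", "aws4_request"):
        signing_key = _hmac_sha256(signing_key, part)
    signature = hmac.new(signing_key, string_to_sign.encode("utf-8"),
                         hashlib.sha256).hexdigest()

    return f"https://{host}{canonical_uri}?{canonical_query}&X-Amz-Signature={signature}"


# Placeholder credentials and bucket, for illustration only.
print(presign_s3_url("PUT", "example-filemaker-containers", "images/photo 1.jpg",
                     "AKIAEXAMPLEKEYID", "example-secret-key", region="us-east-2"))
```

Because the HTTP method is part of the signed request, a URL signed for `PUT` cannot be reused for `GET` or `DELETE`; each call needs its own pre-signed URL.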

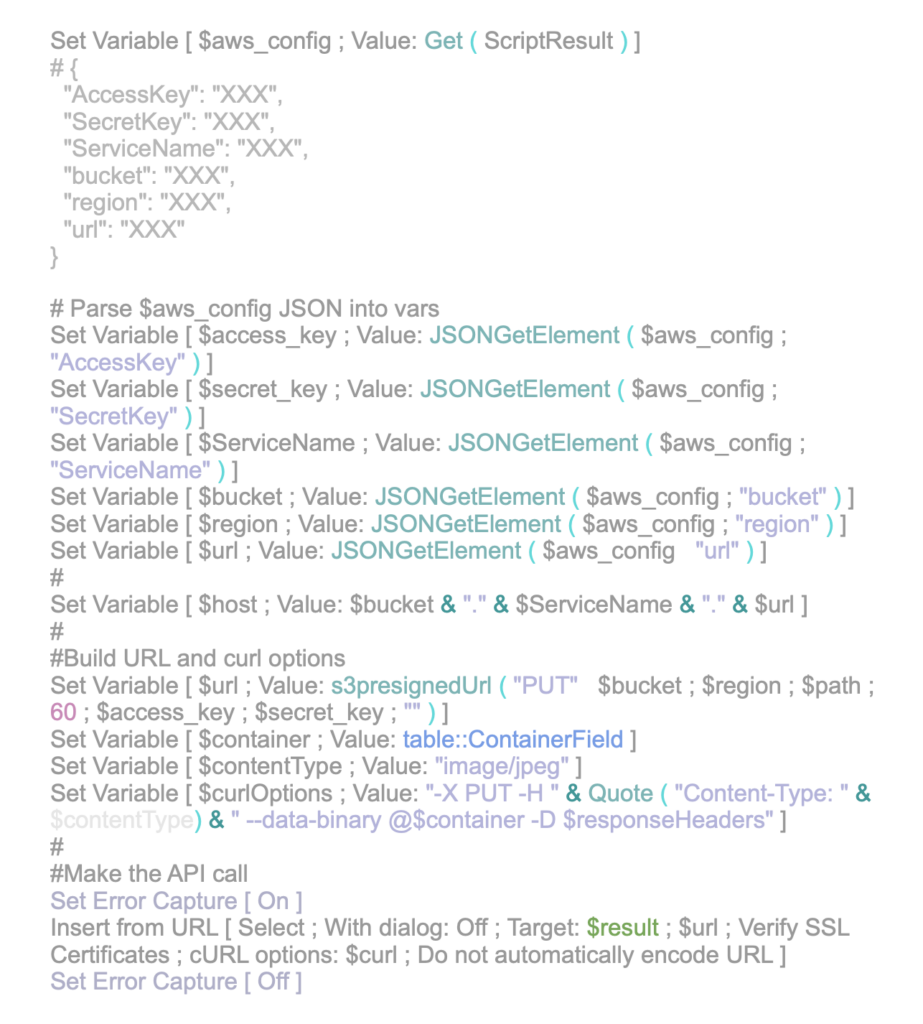

Once we had all the elements working, we were able to add the AWS authentication values to the solution and build the “Insert from URL” calls:

Successful Response

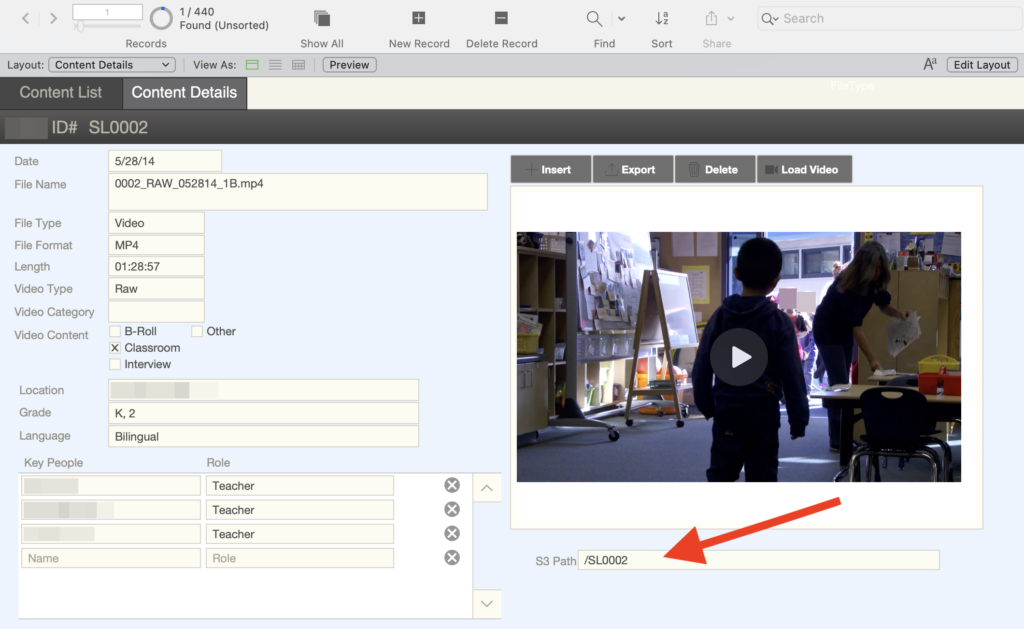

After a successful response, we stored the S3 path to the uploaded image, generated a thumbnail image, then cleared the local container since it was no longer needed to store the file.

- Deleting a file from the bucket is the same call, but uses “DELETE” as the method.

- Viewing a file is similar, except the pre-signed URL string is dropped into a web viewer object.
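Outside FileMaker, the upload and delete calls described above amount to plain HTTP requests against a pre-signed URL. The sketch below shows that shape with Python’s standard library. The helper names are hypothetical, and it assumes you already have a URL that was pre-signed for the matching method.

```python
import urllib.request


def build_s3_request(method: str, presigned_url: str, data: bytes = None,
                     content_type: str = "application/octet-stream") -> urllib.request.Request:
    """Build (but do not send) a request against a pre-signed S3 URL."""
    headers = {"Content-Type": content_type} if data is not None else {}
    return urllib.request.Request(presigned_url, data=data, method=method, headers=headers)


def send(request: urllib.request.Request) -> int:
    """Send the request and return the HTTP status; urlopen raises HTTPError on failure."""
    with urllib.request.urlopen(request) as response:
        return response.status


# Placeholder URL for illustration; a real one comes from your signing step.
upload = build_s3_request("PUT", "https://example.invalid/photo.jpg?X-Amz-Signature=abc", b"file bytes")
delete = build_s3_request("DELETE", "https://example.invalid/photo.jpg?X-Amz-Signature=def")
print(upload.get_method(), delete.get_method())
```

Only after `send` reports success would you store the S3 path and clear the local container, mirroring the order described above.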

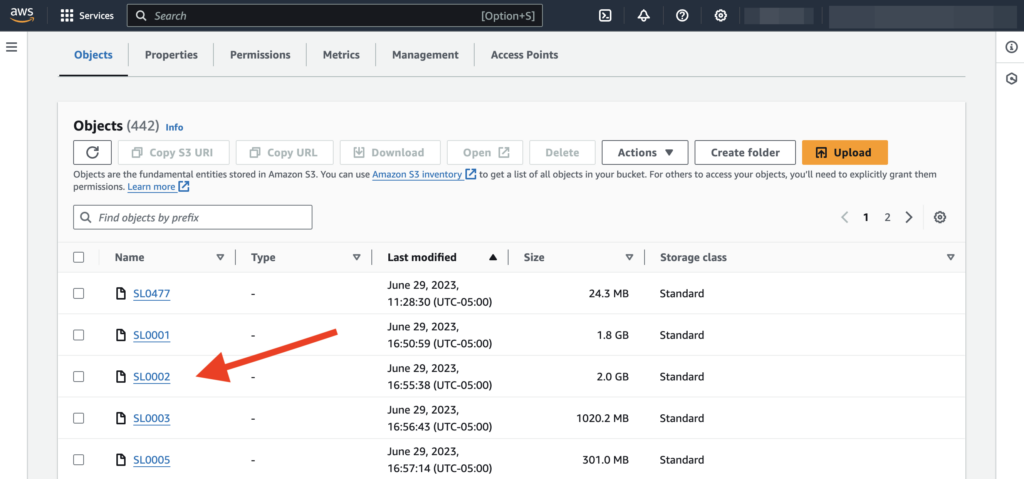

These screenshots show how the file storage looks within the AWS S3 bucket, and how it’s related within the FileMaker layout. Previously, when this 2 GB video was stored within FileMaker containers, it would take several minutes to load and play back, if it didn’t freeze up in the process. Now the client just needs to click Load Video, and they can see and play the video within seconds.

Pricing Considerations

As noted above, AWS S3 is very low cost for the amount of storage likely to be used in FileMaker solutions. One of our clients now using S3 saw a dramatic drop in their monthly FileMaker cloud hosting costs, about 300% lower by moving their approximately 800 GB of container data to S3.

Are You Ready to Free Up Some Time?

- Are you dealing with slow response times when uploading or downloading data from your container fields?

- Are you looking to reduce your hosting costs by reducing the size of your database?

We can help lighten the load your solution is carrying by incorporating storage with Amazon S3.

Our clients who have moved forward with this integration are reporting faster response times and are much happier with the process of uploading and downloading their images and data. We’ve saved them time, alleviated a lot of frustration, and made their solutions more enjoyable and efficient to work with each day.

We’d love the opportunity to bring more peace to your work days as well. Schedule a call with us to get started.

About The Author

Joe Ranne’s creative approach to development, friendly personality, and years of experience in IT and graphic design make for a skilled developer who strives for customer satisfaction. Joe hails from the University of Nebraska at Omaha with backgrounds in Business Administration and Music Composition.